The National Forum on Youth Violence Prevention

Kathleen Tomberg and Jeffrey A. Butts

This project was supported by Grant No. 2010-MU-FX-0007 awarded by the Office of Juvenile Justice and Delinquency Prevention, Office of Justice Programs, U.S. Department of Justice. Points of view expressed in this document are those of the authors and do not necessarily represent the official positions or policies of OJJDP or the U.S. Department of Justice.

INTRODUCTION

In 2012, the Research and Evaluation Center at John Jay College began to publish the results of an assessment conducted between Summer 2011 and Summer 2012. The project measured the effectiveness of the National Forum on Youth Violence Prevention.

The findings suggested that the initiative was generating important changes in five communities participating in the National Forum (Boston, MA; Detroit, MI; Memphis, TN; Salinas, CA; and San Jose, CA). Survey respondents reported a number of potentially valuable outcomes, including expanded opportunities for youth, improvements in the extent of inter-agency and cross-sector collaborations, and successful efforts to draw upon the knowledge and expertise of a broad range of community members. According to survey respondents, the National Forum cities were developing stronger capacities to reduce youth violence.

In 2016, with the support of the Office of Juvenile Justice and Delinquency Prevention, the John Jay research team launched a new iteration of the same survey in all 15 cities then involved in the National Forum. The respondents in the new survey were positive about their growing collaborations and the effectiveness of their strategies for preventing youth violence. As with the previous surveys, the 2016 survey measured the perceptions of community leaders. It was not a direct measure of youth violence.

METHOD

In 2016, the Research and Evaluation Center sent surveys about violence prevention efforts to public officials and their local partners in 15 cities participating in the National Forum on Youth Violence Prevention. The survey followed the same methodology used in the original three waves of survey administration in 2011 and 2012. The research team contacted the site coordinators in each Forum city and asked for help identifying 20 to 40 key stakeholders to serve as survey informants. In theory, each list contained the entire population of stakeholders in any given Forum city, rather than a sample of all possible informants. As in the earlier surveys, this assumption allowed the research team to incorporate a finite population correction in analysis to produce smaller margins of error (Rao and Scott 1981).*

* Rao, J.N.K. and A.J. Scott (1981). The analysis of categorical data from complex sample surveys: Chi-squared tests for goodness of fit and independence in two-way tables. Journal of the American Statistical Association, 76(374), 221-230.
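Treating each list as a complete population narrows the margin of error. The textbook finite population correction for a sample proportion shows why. This is a minimal illustration, not the research team's analysis code. The function name, the 1.96 multiplier for a 95 percent interval, and the list of 40 informants with 27 respondents are all hypothetical.

```python
import math
from typing import Optional


def margin_of_error(p: float, n: int, N: Optional[int] = None, z: float = 1.96) -> float:
    """Half-width of a confidence interval for a proportion p observed among n respondents.

    When N (the size of the full list of informants) is supplied, the variance
    p(1 - p)/n is multiplied by the finite population correction (N - n)/(N - 1),
    which shrinks toward zero as the number of respondents approaches N.
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    variance = p * (1 - p) / n
    if N is not None:
        if N < 2 or n > N:
            raise ValueError("need N >= 2 and n <= N")
        variance *= (N - n) / (N - 1)
    return z * math.sqrt(variance)


# Hypothetical city: 40 listed informants, 27 of whom responded.
without_fpc = margin_of_error(0.5, n=27)
with_fpc = margin_of_error(0.5, n=27, N=40)
print(f"without FPC: {without_fpc:.3f}, with FPC: {with_fpc:.3f}")
```

In this hypothetical case the correction cuts the margin of error from roughly 0.19 to roughly 0.11. If every listed informant responds, the margin falls to zero.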

Ideal survey participants were people informed about the overall effectiveness of local law enforcement, youth services, and the adequacy of violence prevention efforts in their city as a whole. Each list typically included judges, police officers, educators, substance abuse and mental health treatment professionals, community activists and organizers, members of faith-based organizations, and youth advocates. Site coordinators were told that survey participants did not need to have attended a National Forum-related meeting or even to be aware of the city’s Forum efforts. The 2016 survey did not specifically target the same individuals as the 2011-2012 surveys, though some individuals may have participated in both despite the four-year gap.

Sample Characteristics

In the original three survey iterations, overall response rates declined from 64 percent in the first wave to 50 percent in the third wave. Lower response rates at each successive administration were not unexpected, as the novelty of any survey diminishes over time and the same individuals were invited to complete the survey each time.

In the 2016 survey, the research team benefited from the strong support of Forum site coordinators in many of the cities. The project achieved an overall response rate of 68 percent among the nearly 500 individuals invited to complete the survey (Figure 1). Among only the five cities included in the earlier study, the 2016 response rate was 72 percent. Boston achieved the highest response rate in every wave of the survey, from 2011 to 2016.

Respondents in each administration of the survey were similar in terms of age, sex, race, and ethnicity. The majority of respondents were between 40 and 59 years of age (e.g., 62% in Summer 2012 and 60% in 2016) and approximately half were male (49% in Summer 2012 and in 2016).

The racial/ethnic composition of the sample fluctuated more between survey administrations. Almost half of the respondents in Summer 2011 (45%) and Summer 2012 (44%) were Caucasian, compared with just over a third in Winter 2012 (35%) and 2016 (38%). African-American respondents (39% in both iterations) and Hispanic/Latino respondents (14% in Winter 2012 and 8% in 2016) together made up a majority of Winter 2012 respondents (53%) and nearly half of 2016 respondents (47%).

Respondents varied in occupational affiliation (Figure 2). The survey asked respondents to indicate their professional or occupational role as it related to their expertise in youth violence prevention. Respondents chose from a list of more than a dozen possible roles and could also write in other categories. Researchers collapsed the answers into four broad groups: justice system, social services, education, and other allied sectors. The 2016 respondents were primarily in social services (33%) or other allied sectors (33%). There was more variation within individual cities, although no single sector ever made up more than 60 percent of a city’s respondents.

Survey Items

The assessment team’s survey tracked how respondents in each city viewed the effectiveness of their community’s violence prevention strategies and whether those strategies changed over time, as intended by the National Forum. The 2016 survey included 98 items, a subset of the 144 items in the 2011-2012 survey; items that were not statistically useful in the original 2012 analysis were eliminated. Survey items measured the perceptions, opinions, and attitudes of stakeholders in each community. They also measured changes in policies and leadership dynamics and improvements in organizational relationships, which the National Forum hypothesized would produce positive effects on public safety. As in the 2011-2012 surveys, most items were drawn from existing scales and instruments and then combined into a new tool created specifically for this assessment.

The number of items each respondent completed varied by respondent group. Items designed to measure general perceptions and attitudes about the prevention of youth violence were administered to the total sample. Other items required respondents to have informed opinions about the National Forum strategy and process. These items were presented only to respondents who indicated, in an earlier question, that they were already aware of the National Forum. In the 2016 survey, almost three-quarters (73%) of all respondents indicated that they had been “aware” of the National Forum before receiving the survey. A third set of items measured the perceptions of an even smaller group: respondents who were not only aware of the National Forum but also directly involved in it. In the 2016 survey, almost half of all respondents (44%) indicated through their answers that they were “involved” in the National Forum.
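The three tiers of items form nested skip logic. In the sketch below, a respondent only reaches the involvement items after passing the awareness screen. It assumes two yes/no screening answers. The item-set names and the `Respondent` fields are hypothetical labels, not the survey's actual variable names.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Respondent:
    aware_of_forum: bool     # answered the screening question as "aware" of the Forum
    involved_in_forum: bool  # indicated direct involvement in Forum work


def item_sets(r: Respondent) -> List[str]:
    """Return the names of the (hypothetical) item sets shown to a respondent."""
    sets = ["general_perceptions"]  # administered to the total sample
    if r.aware_of_forum:
        sets.append("forum_strategy")  # requires awareness of the Forum
        if r.involved_in_forum:
            # narrowest group: aware of the Forum and directly involved in it
            sets.append("forum_involvement")
    return sets


print(item_sets(Respondent(aware_of_forum=True, involved_in_forum=False)))
```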

Factor Analysis

To simplify the task of analyzing the dozens of items in each survey, the research team conducted a series of exploratory and confirmatory factor analyses on the Summer 2011 survey data. This analysis examined 99 items and combined them into multi-item “factors.” The preliminary factor structure based on Summer 2011 data was refined and revised using the survey data from Winter 2012. The third and fourth rounds of surveys, in Summer 2012 and 2016, were analyzed using the previously established factor structure. (All survey items and factors with reliability coefficients are available in an appendix.)

The research team performed an exploratory factor analysis on the survey items answered by all respondents in Summer 2011 and extracted seven factors using principal components methods. The results suggested that three of these factors could be retained for subsequent analysis. Together they explained more than 50 percent of the total variance of the included items, and they remained stable in the Winter 2012 survey.

A second series of confirmatory factor analyses tested a priori constructs related to the goals and strategies being pursued by the cities involved in the National Forum. Across 10 confirmatory factor analyses, an additional 18 factors emerged, of which 12 performed well enough to be retained in subsequent analyses. Retention criteria included a sufficiently high reliability coefficient (Cronbach’s α) and reasonable stability from the Summer 2011 to Winter 2012 surveys.

All 15 factors retained from both types of factor analysis (three exploratory and 12 confirmatory) were rotated using the promax method. An item was said to load on a factor if its loading was greater than .40. If an item loaded on more than one factor at greater than .40, it was not retained in one of those factors. Of the original 99 items included in the factor analysis, 21 did not load significantly on any factor and were set aside for separate analysis. These 21 items were dropped entirely from the 2016 iteration of the survey.
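The loading rule can be expressed as a short function over a rotated loading matrix. This sketch assumes the loadings have already been estimated and rotated elsewhere, and it compares absolute loadings against the .40 cutoff. The text does not say which factor keeps a cross-loading item, so the sketch assumes the item stays on the factor where it loads most strongly. The item names and loadings are hypothetical.

```python
from typing import Dict, List, Tuple

Loadings = Dict[str, List[float]]  # item name -> one rotated loading per factor


def assign_items(loadings: Loadings, cutoff: float = 0.40) -> Tuple[Dict[int, List[str]], List[str]]:
    """Apply a loading cutoff to a rotated loading matrix.

    Returns (assignments, unloaded): assignments maps factor index -> item names,
    and unloaded lists items that exceed the cutoff on no factor.
    """
    assignments: Dict[int, List[str]] = {}
    unloaded: List[str] = []
    for item, row in loadings.items():
        # factors on which this item loads above the cutoff (absolute value)
        hits = [j for j, value in enumerate(row) if abs(value) > cutoff]
        if not hits:
            unloaded.append(item)  # set aside for separate analysis
            continue
        # Assumption: a cross-loading item is kept only on its strongest factor.
        best = max(hits, key=lambda j: abs(row[j]))
        assignments.setdefault(best, []).append(item)
    return assignments, unloaded


# Hypothetical promax-rotated loadings for four items on two factors.
example = {
    "q1": [0.72, 0.10],
    "q2": [0.55, 0.48],  # cross-loads on both factors
    "q3": [0.15, 0.61],
    "q4": [0.30, 0.22],  # below .40 everywhere
}
assignments, unloaded = assign_items(example)
```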

RESULTS

The research team at John Jay College relied on stakeholder surveys to track perceptions and opinions in each community involved in the National Forum on Youth Violence Prevention. Fifteen months elapsed between the Summer 2011 and Summer 2012 surveys, and another 3.5 years elapsed before the Winter 2016 survey. Because cities had been involved in the National Forum for varying lengths of time, the research team did not expect consistent outcomes. It was encouraging, therefore, to find that cities involved in the National Forum seemed to exhibit sustained improvements on a number of key indicators from the 2012 report.

General Perceptions of the National Forum

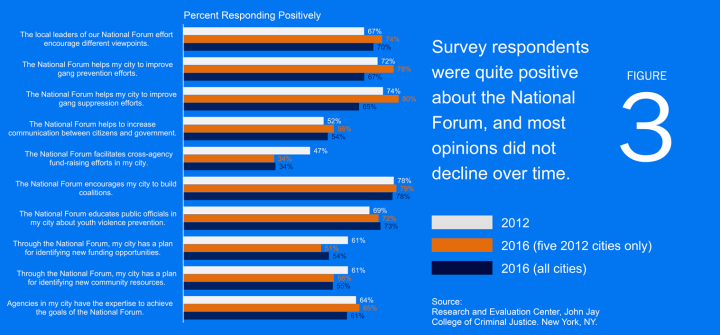

One of the first concepts explored by the assessment team was the extent to which participating cities supported the efforts of the National Forum itself (Figure 3). In other words, did individuals believe that their cities were benefiting from participation in the Forum, and did conditions in their communities seem to be improving? Responses overall were quite positive and did not change appreciably between the Summer 2012 and 2016 surveys. In general, respondents in National Forum cities supported the efforts of the Forum. Responses from individuals in the original five cities were also compared over the entire time period. Their 2016 responses were more positive on every item except three questions dealing with funding and resources. This suggests the National Forum may have inspired sustainable changes in how participating cities deal with youth violence.

Indicators of Change

The 2012 National Forum report found many promising indicators of positive change when data from the 2011-2012 surveys were examined across all of the National Forum cities (Figure 4). These same indicators were examined in the 2016 survey, and the new data from the same five cities were added to the original 2012 graphs. By 2016, the percentage of respondents who believed youth violence was worse in their city than in similarly sized cities had begun to decrease. Meanwhile, the percentage reporting a less serious youth violence problem in their city than in others rose slightly. For example, respondents were asked to compare youth violence in their communities today with a “few years ago.” The percentage who believed that today’s level of violence was “more serious” declined from 62 percent to 49 percent by the 2016 survey.

Perceptions of gun violence appeared to be worse in 2016 than in 2012. However, perceived levels of violence associated with drug sales, organized gangs, and family conflict all improved between the 2011 and 2012 surveys, and those improvements were generally sustained in the 2016 survey.

Positive perceptions of law enforcement were sustained between 2012 and 2016. In the first survey, 49 percent of all respondents believed that law enforcement efforts focused on youth violence had increased in the previous year. By the third and fourth surveys, this perception was shared by 57 and 56 percent of all respondents, respectively (Figure 5).

Respondents’ perceptions of gang activity continued to improve. In 2012, 33 percent believed that gang activity had become more visible in their community, compared with 46 percent in 2011. By 2016, only 15 percent of all respondents reported an increase in the visibility of gang activity, while 32 percent reported a decrease.

The striking improvements in services and support for youth and families observed in 2011-2012 continued in 2016. Respondents reported increasing numbers of youth able to participate in supervised activities, better availability of social services, and larger numbers of young people able to enroll in school. Improvements in collaboration between law enforcement and schools were maintained from 2012 to 2016.

Assessing the effects of the National Forum by examining almost 100 survey items across four time periods and 15 cities would quickly become unwieldy. Instead, the research team calculated 15 summary factor scores for each respondent. The number of items in each factor ranged from two to 10. Researchers first standardized the responses by converting each item to a scale on which higher values reflect more positive opinions. Only valid responses were used in calculating mean scores. In other words, if a respondent completed only nine of the 10 items on a particular factor, his or her mean score for that factor was based on just those nine valid responses.
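A respondent's score on one factor can be sketched as a mean of valid answers after flipping negatively worded items. This is a minimal sketch under stated assumptions. It assumes every item uses a 1-to-5 response scale and that "converting each item" means reverse-coding negatively worded items. The function name and example answers are hypothetical.

```python
from typing import Optional, Sequence


def factor_score(
    responses: Sequence[Optional[int]],
    reverse: Sequence[bool],
    scale_max: int = 5,
) -> Optional[float]:
    """Mean of a respondent's valid answers on one factor.

    responses: one answer per item on the factor, None where the item was skipped.
    reverse:   True for negatively worded items, recoded so higher = more positive.
    Assumes every item uses a 1..scale_max response scale.
    """
    if len(responses) != len(reverse):
        raise ValueError("need one reverse flag per item")
    valid = [
        (scale_max + 1 - value) if flip else value
        for value, flip in zip(responses, reverse)
        if value is not None  # missing answers are simply left out
    ]
    return sum(valid) / len(valid) if valid else None


# Hypothetical four-item factor: one skipped item, one reverse-worded item.
score = factor_score([4, None, 2, 5], [False, False, True, False])
```

Here the skipped item is ignored and the reverse-worded 2 becomes a 4. The score is therefore the mean of 4, 4, and 5.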

Researchers computed a reliability coefficient (Cronbach’s α) for each factor to assess the inter-item reliability of the score. This is the extent to which the analysis could treat the group of items as one measure. The coefficient reflects how strongly the items that make up a factor correlate with one another. Higher values (i.e., those approaching 1.0) indicate greater internal consistency. The 2016 alpha coefficients for the factors used in the assessment indicated acceptable reliabilities, ranging from .633 to 1.00. Each factor’s reliability coefficient remained relatively consistent across survey iterations, varying by no more than 0.287.
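The sketch below computes Cronbach's α from its standard variance formula. It is an illustration only. It assumes complete responses, whereas the factor scores described above tolerate missing items, and the report does not say how missing data were handled when computing α.

```python
from statistics import variance
from typing import Sequence


def cronbach_alpha(rows: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for a factor.

    rows: one list per respondent, each holding that respondent's k item scores.
    Uses alpha = k/(k-1) * (1 - sum of item variances / variance of total scores).
    Assumes complete responses (no missing items).
    """
    k = len(rows[0])
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two respondents")
    columns = list(zip(*rows))  # one tuple of scores per item
    item_variance_sum = sum(variance(col) for col in columns)
    total_variance = variance([sum(row) for row in rows])
    if total_variance == 0:
        raise ValueError("total scores have no variance")
    return k / (k - 1) * (1 - item_variance_sum / total_variance)
```

Two perfectly correlated items, such as `[[1, 1], [2, 2], [3, 3]]`, give α = 1.0. Two uncorrelated items, such as `[[1, 1], [1, 2], [2, 1], [2, 2]]`, give α = 0. This matches reading higher values as greater internal consistency.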

Once each individual’s score was calculated for all factors, group means were calculated for all respondents combined and for each city. Researchers conducted a series of independent-samples t-tests to assess changes in scores between survey iterations, both for the entire respondent sample and within each city. Each t-test assessed whether the change in a group mean between 2011 and 2016 was significantly different from zero, that is, from no change at all. A threshold of p < 0.10 was used to signify that a difference in means was statistically significant and unlikely to be the result of chance variation alone.
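A minimal sketch of one such comparison follows. It assumes Welch's unequal-variance form of the independent-samples test, although the report does not say whether the pooled or Welch version was used. The scores are hypothetical.

```python
from math import sqrt
from statistics import mean, variance
from typing import Sequence, Tuple


def welch_t(before: Sequence[float], after: Sequence[float]) -> Tuple[float, float]:
    """Welch's independent-samples t statistic and degrees of freedom.

    A positive t means the mean rose from the earlier wave to the later one.
    """
    n1, n2 = len(before), len(after)
    se1 = variance(before) / n1  # squared standard error of the earlier mean
    se2 = variance(after) / n2   # squared standard error of the later mean
    t = (mean(after) - mean(before)) / sqrt(se1 + se2)
    # Welch-Satterthwaite approximation to the degrees of freedom
    df = (se1 + se2) ** 2 / (se1 ** 2 / (n1 - 1) + se2 ** 2 / (n2 - 1))
    return t, df


t, df = welch_t([1, 2, 3, 4, 5], [3, 4, 5, 6, 7])
```

Turning t into a p-value requires the t distribution. With SciPy available, `scipy.stats.ttest_ind(before, after, equal_var=False)` performs the same test directly, returning t with the opposite sign because it subtracts the second sample's mean from the first's. The resulting two-sided p-value would then be judged against the p < 0.10 threshold.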

After the t-tests were completed, the finite population correction (described above) was applied to adjust the variances and determine which changes in mean scores were significant for each sample of respondents. The correction was employed because each city’s survey sample assumed a finite number of ideal informants in each city, rather than an infinite population of people who could complete the survey. Separate finite population corrections were applied to the sample as a whole and to the subset of respondents from the five cities included in the original 2012 report. The analysis focused on changes in these five cities because such comparisons show how respondents in continuously involved Forum cities may have changed their perceptions over time.
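The sketch below shows how the correction enlarges t by shrinking each group's variance. It uses the common (1 - n/N) form that multiplies a sample-variance estimate. The report does not state which form of the correction or which t-test variant was used, and the group sizes and scores are hypothetical.

```python
from math import sqrt
from statistics import mean, variance
from typing import Sequence


def var_of_mean(scores: Sequence[float], population_size: int) -> float:
    """Estimated variance of a group mean, with the finite population correction.

    The sample variance s^2 / n is scaled by (1 - n / N): when every listed
    informant responds (n == N), the mean has no sampling error at all.
    """
    n = len(scores)
    if n < 2 or n > population_size:
        raise ValueError("need 2 <= n <= N")
    return variance(scores) / n * (1 - n / population_size)


def fpc_t(before: Sequence[float], n_pop_before: int,
          after: Sequence[float], n_pop_after: int) -> float:
    """t statistic for the change in means using FPC-adjusted variances."""
    se = sqrt(var_of_mean(before, n_pop_before) + var_of_mean(after, n_pop_after))
    if se == 0:
        raise ValueError("no sampling variance left after the correction")
    return (mean(after) - mean(before)) / se


# Hypothetical: 5 of 10 listed informants responded in each wave.
t_fpc = fpc_t([1, 2, 3, 4, 5], 10, [3, 4, 5, 6, 7], 10)
```

With 5 of 10 informants responding in each wave, these scores give t = 2.0 without the correction. With the correction, t rises to about 2.83, which makes a given change in means more likely to clear the significance threshold.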

The strongest indications of improvement may be those factor scores that showed a statistically significant increase between 2011 and 2016. Of the factor scores made up of items completed by all participants, there was a significant increase among the five cities combined in perceptions of Organizational Collaboration (4.17 to 4.21), Opportunities for Youth (2.72 to 3.01), Law Enforcement Efficacy (3.67 to 3.78), and Decrease in Perceived Violence (2.50 to 2.74) between 2011 and 2016 (Figure 6). Opportunities for Youth increased the most, suggesting that cities were becoming better able to connect youth with services, recreational activities, educational opportunities, and jobs.

Among factors made up of items completed only by participants aware of the National Forum, there were significant increases in Community Expertise (3.58 to 3.80), Multidisciplinary Focus (3.47 to 3.53), and Personal Commitment (4.33 to 4.41) between 2011 and 2016. Community Expertise increased the most, suggesting that agencies and organizations may have become better at organizing their resources to strengthen prevention.
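The claims about which factors increased the most rest on simple differences between the reported means, which a few lines of arithmetic can check. This uses only the means reported in the two preceding paragraphs. It does not reproduce the significance tests or the finite population correction.

```python
from typing import Dict, Tuple

Scores = Dict[str, Tuple[float, float]]  # factor -> (2011 mean, 2016 mean)

# Means for the five original cities, as reported in the text.
all_respondents: Scores = {
    "Organizational Collaboration": (4.17, 4.21),
    "Opportunities for Youth": (2.72, 3.01),
    "Law Enforcement Efficacy": (3.67, 3.78),
    "Decrease in Perceived Violence": (2.50, 2.74),
}
aware_respondents: Scores = {
    "Community Expertise": (3.58, 3.80),
    "Multidisciplinary Focus": (3.47, 3.53),
    "Personal Commitment": (4.33, 4.41),
}


def changes(scores: Scores) -> Dict[str, float]:
    """2016 mean minus 2011 mean, rounded to the two decimals reported."""
    return {name: round(after - before, 2) for name, (before, after) in scores.items()}


def largest_gain(scores: Scores) -> str:
    """Name of the factor whose mean rose the most."""
    deltas = changes(scores)
    return max(deltas, key=deltas.get)


print(changes(all_respondents))
print(changes(aware_respondents))
```

The gains are 0.29 for Opportunities for Youth and 0.22 for Community Expertise, the largest in their respective groups.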

Comparing City Strengths

A city’s highest-scoring factor may indicate where respondents believe the city is doing best in its efforts to prevent and reduce violence (Figure 7). One factor, Personal Commitment to the National Forum, was in the top three for every city. This factor measured how important the National Forum’s efforts were to respondents and how likely they were to remain engaged in the work of the Forum. In 10 of the 15 cities, this factor received the highest score; it scored second highest in one city and third highest in three cities. Thus, regardless of how cities scored on perceptions of violence and the efficacy of the strategies promoted by the National Forum, individual members of these city networks remain committed to the work and believe that Forum efforts are helping them succeed.

Organizational Collaboration was the next most consistently high-ranking factor, appearing in the top three for 11 cities. This factor was designed to measure how well a city’s organizations work together on violence prevention and support each other through data and resource sharing. Agency Commitment was the only other factor that a majority of cities ranked in their top three (nine cities). This factor measured respondents’ perceptions of the importance of being part of the National Forum to enhance violence prevention work and to facilitate collaboration.
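Tallies of how many cities place a factor in their top three come from ranking each city's factor means. Here is a minimal sketch of that tally, using two hypothetical cities with invented scores rather than the report's data. How the report handled ties is not stated; the sketch keeps whichever tied factor appears first.

```python
from collections import Counter
from typing import Dict

CityScores = Dict[str, Dict[str, float]]  # city -> {factor name: mean score}


def top_three_counts(city_scores: CityScores) -> Counter:
    """Count how many cities rank each factor among their three highest scores.

    sorted() is stable even with reverse=True, so tied factors keep the order
    in which they appear in each city's dict (an assumed tie-breaking rule).
    """
    counts: Counter = Counter()
    for scores in city_scores.values():
        ranked = sorted(scores, key=scores.get, reverse=True)
        counts.update(ranked[:3])
    return counts


# Hypothetical scores for two cities (not taken from the report).
example = {
    "City A": {"Personal Commitment": 4.5, "Organizational Collaboration": 4.2,
               "Agency Commitment": 4.0, "Opportunities for Youth": 3.1},
    "City B": {"Personal Commitment": 4.4, "Organizational Collaboration": 3.6,
               "Agency Commitment": 3.9, "Opportunities for Youth": 3.7},
}
counts = top_three_counts(example)
```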

CONCLUSION

The surveys described above do not constitute a rigorous evaluation of the National Forum on Youth Violence Prevention, but they accurately capture the perceptions and attitudes of the individuals most involved in violence prevention efforts in each city participating in the National Forum. Surveys measure perceptions of change, of course, and not actual change. Assessing the effects of a city’s violence reduction efforts would require measurements that track the real incidence of youth violence over time. Any city committed to reducing youth violence should track multiple indicators. These should include some constructed from police data, others from community agencies, and self-reported data from youth, families, and community residents. As a complement to such indicators, however, a repeated survey of informed system actors and justice professionals is a valuable source of information.

The findings from this study suggest that the National Forum provides meaningful assistance to cities. The organizational networks involved in the National Forum appear to be moving in positive directions and the individuals involved in those networks report high levels of confidence that they are making a difference. Respondents in all 15 cities exhibit a strong commitment to the Forum and generally believe that their communities are stronger as a result of their participation. These results suggest that National Forum cities benefit by developing greater opportunities for youth, more effective violence prevention approaches, improved perceptions of law enforcement, and a broader engagement of community members.

_______________________________

Copyright by the Research and Evaluation Center at John Jay College of Criminal Justice, City University of New York (CUNY).

John Jay College of Criminal Justice

524 59th Street, Suite 605BMW

New York, NY 10019

http://www.JohnJayREC.nyc

June 2016

This report was prepared with support from the Office of Juvenile Justice and Delinquency Prevention (OJJDP), part of the U.S. Department of Justice and its Office of Justice Programs (OJP), participants in the federal partnership responsible for launching the National Forum on Youth Violence Prevention. The authors are grateful to the leadership of OJJDP and the other federal partners for their guidance and support during the development of this report and the National Forum assessment of which it is a part. The authors are also grateful to the violence prevention leaders in each of the National Forum cities who helped compile the lists of survey respondents that generated the cross-city comparison data presented in the report. Without their efforts, this report would not exist. Finally, the design and execution of a complicated survey project requires the efforts of many people. The authors are very grateful for the support and advice of the Office for the Advancement of Research and the Office of Sponsored Programs at John Jay College of Criminal Justice, as well as assistance received from current and former colleagues from the Research and Evaluation Center: Emily Pelletier, Kevin Wolff, Rhoda Ramdeen, and Jennifer Peirce.

Recommended Citation

Tomberg, Kathleen and Jeffrey A. Butts (2016). Durable Collaborations: The National Forum on Youth Violence Prevention. New York, NY: Research and Evaluation Center, John Jay College of Criminal Justice, City University of New York.